Right to Explanation: Designing a Visual Protocol for Explaining Algorithmic Decisions (XAI)

The use of artificial intelligence in financial services (FinTech, insurance, banking) is now widespread. AI models assess creditworthiness, set insurance premiums, approve loans, and manage investments, often without human intervention. The problem is that these models are often “Black Boxes,” even for the people who built them.

If a bank cannot meaningfully and clearly explain to a client why their loan application was rejected, it is immediately exposed to substantial legal risks and regulatory penalties.

The Collision Between GDPR and the “Black Box”

The risk is twofold and extremely high for the financial sector:

- GDPR (Article 22 Automated individual decision-making, including profiling – Right to Explanation):

GDPR gives clients the right to request a meaningful explanation for any decision made solely by automated means that produces a legal or similarly significant effect (for example, a loan rejection or cancellation of insurance based on behavioral analysis). An explanation full of legal or technical jargon does not meet that standard.

- EU AI Act (High-Risk System):

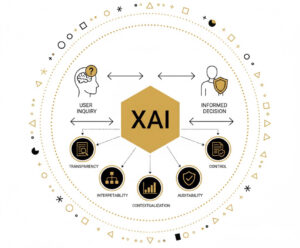

AI systems used for evaluating creditworthiness or financial risk are classified as High-Risk. This means they must meet strict requirements for transparency, human oversight, and, most importantly, objective interpretability of results (XAI – Explainable AI).

Failure to provide a meaningful explanation jeopardizes clients’ fundamental rights and exposes institutions to maximum penalties.

LDT and XAI: From Technical Forensics to Legal Transparency

Explainable AI (XAI) is a technical tool for deconstructing a model. Legal Design Thinking (LDT) is a tool for transforming those technical insights into a legally valid and human-readable format.

LDT is used to design the Visual Explanation Protocol:

- Visual Map of Decision Factors

Translate complex weighted factors (used by the AI model) into clear visuals.

When AI rejects a loan, LDT designs an interface that does not deliver a generic message but instead shows a graphic breakdown of the main factors.

For example, the client sees a diagram showing: Late payment history contributed 55% to the negative decision; Income level 30%; Lack of collateral 15%.
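The percentage breakdown in this example can be derived from per-factor attribution scores. The following is a minimal sketch, assuming the XAI layer already produces one signed score per factor (negative meaning "pushed toward rejection"). The function names, the score scale, and the sample values are hypothetical and chosen only to reproduce the 55/30/15 split above; they do not describe any particular model or library.

```python
from typing import Dict


def negative_contribution_shares(attributions: Dict[str, float]) -> Dict[str, int]:
    """Return each negative factor's share of the total negative weight, in percent."""
    # Keep only factors that pushed the decision toward rejection (negative scores).
    negative = {name: -score for name, score in attributions.items() if score < 0}
    total = sum(negative.values())
    if total == 0:
        return {}
    # Rounded shares are for display; with other inputs they may not sum to exactly 100.
    shares = {name: round(100 * weight / total) for name, weight in negative.items()}
    # Largest contributor first, so the chart leads with the main reason.
    return dict(sorted(shares.items(), key=lambda item: item[1], reverse=True))


def render_bars(shares: Dict[str, int], width: int = 20) -> str:
    """Render the shares as a plain-text bar chart, one factor per line."""
    lines = []
    for name, percent in shares.items():
        bar = "#" * round(width * percent / 100)
        lines.append(f"{name:<22}{bar} {percent}%")
    return "\n".join(lines)


# Hypothetical attribution scores for one rejected application.
example_attributions = {
    "Income level": -6.0,
    "Late payment history": -11.0,
    "Lack of collateral": -3.0,
    "Length of relationship": 2.0,  # positive factor, excluded from the breakdown
}

shares = negative_contribution_shares(example_attributions)
chart = render_bars(shares)
print(chart)
```

Only factors that counted against the client appear in the chart, since the goal is to show what led to the rejection and what could be improved. A production interface would replace the text bars with a proper graphic.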

Such a breakdown supports the GDPR requirement for a “meaningful explanation” because the client can clearly see why they were rejected and what they can improve.

- Plain Language Notification Protocol

Ensure that even the written explanation is legally correct and understandable.

LDT creates notification templates written in Plain Language. Instead of citing legal articles, the explanation is action-oriented:

“Our decision is based on the fact that your current liabilities exceed the legal limit for your income level. Recommendation: reduce debt by X% and reapply in 30 days.”

- Auditability Dashboard

Provide legal proof for regulators.

LDT designs an internal dashboard for legal and compliance teams that automatically records all factors that led to the rejection.

During a regulatory inspection, the bank can immediately show documented, visual evidence of how each decision was reached, which supports its case that the decision-making process was fair, unbiased, and compliant.
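The plain-language notification and the audit record described above can be produced from the same decision data. The sketch below is one possible shape, not a prescribed format: the `DecisionRecord` fields, the template wording, the model version label, and the sample recommendation are all assumptions made for illustration. It uses only the Python standard library.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from string import Template
from typing import Dict, List

# Hypothetical plain-language template: names the main factor and a next step.
NOTICE_TEMPLATE = Template(
    "Our decision is mainly based on: $main_factor "
    "(${share}% of the negative result). Recommendation: $recommendation"
)


@dataclass(frozen=True)
class DecisionRecord:
    """One audit entry: what was decided, why, and what the client was told."""
    application_id: str
    outcome: str
    factor_shares: Dict[str, int]
    notice_text: str
    model_version: str
    recorded_at: str


def build_record(application_id: str, outcome: str, factor_shares: Dict[str, int],
                 recommendation: str, model_version: str) -> DecisionRecord:
    # The factor with the largest share leads the client-facing notice.
    main_factor = max(factor_shares, key=factor_shares.get)
    notice = NOTICE_TEMPLATE.substitute(
        main_factor=main_factor.lower(),
        share=factor_shares[main_factor],
        recommendation=recommendation,
    )
    return DecisionRecord(
        application_id=application_id,
        outcome=outcome,
        factor_shares=dict(factor_shares),
        notice_text=notice,
        model_version=model_version,
        recorded_at=datetime.now(timezone.utc).isoformat(),
    )


def append_to_log(record: DecisionRecord, log: List[str]) -> None:
    """Store the record as one JSON line; a real system would use durable storage."""
    log.append(json.dumps(asdict(record), sort_keys=True))


# Illustrative values only.
audit_log: List[str] = []
record = build_record(
    application_id="APP-0001",
    outcome="rejected",
    factor_shares={"Late payment history": 55, "Income level": 30, "Lack of collateral": 15},
    recommendation="settle overdue payments and reapply in 30 days.",
    model_version="credit-model-demo",
)
append_to_log(record, audit_log)
print(record.notice_text)
```

Storing the exact notice text alongside the factors and the model version means the compliance team can later show both what the system decided and what the client was actually told.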

Financial institutions can no longer hide their decisions behind algorithmic “Black Boxes.” LDT is essential because it transforms the technical complexity of XAI into legal transparency. By designing a Visual Explanation Protocol, banks not only avoid maximum penalties but also build essential trust in the critical financial services sector.

Is your AI “Black Box” ready to be legally and visually opened?
